Keeping Bad Leads Out of Your Database: The 1-10-100 Rule Explained

In the realm of B2B marketing, data isn’t just king; it’s the entire kingdom. Yet managing this vast empire of information can feel like navigating a minefield, where one wrong step (or click) can have costly repercussions. It’s no surprise, then, that 4 in 10 marketers are not confident in the quality of the data in their marketing automation tool and CRM. This is where understanding and applying the 1-10-100 rule becomes crucial for marketers who want to reign over their data rather than be dethroned by it.

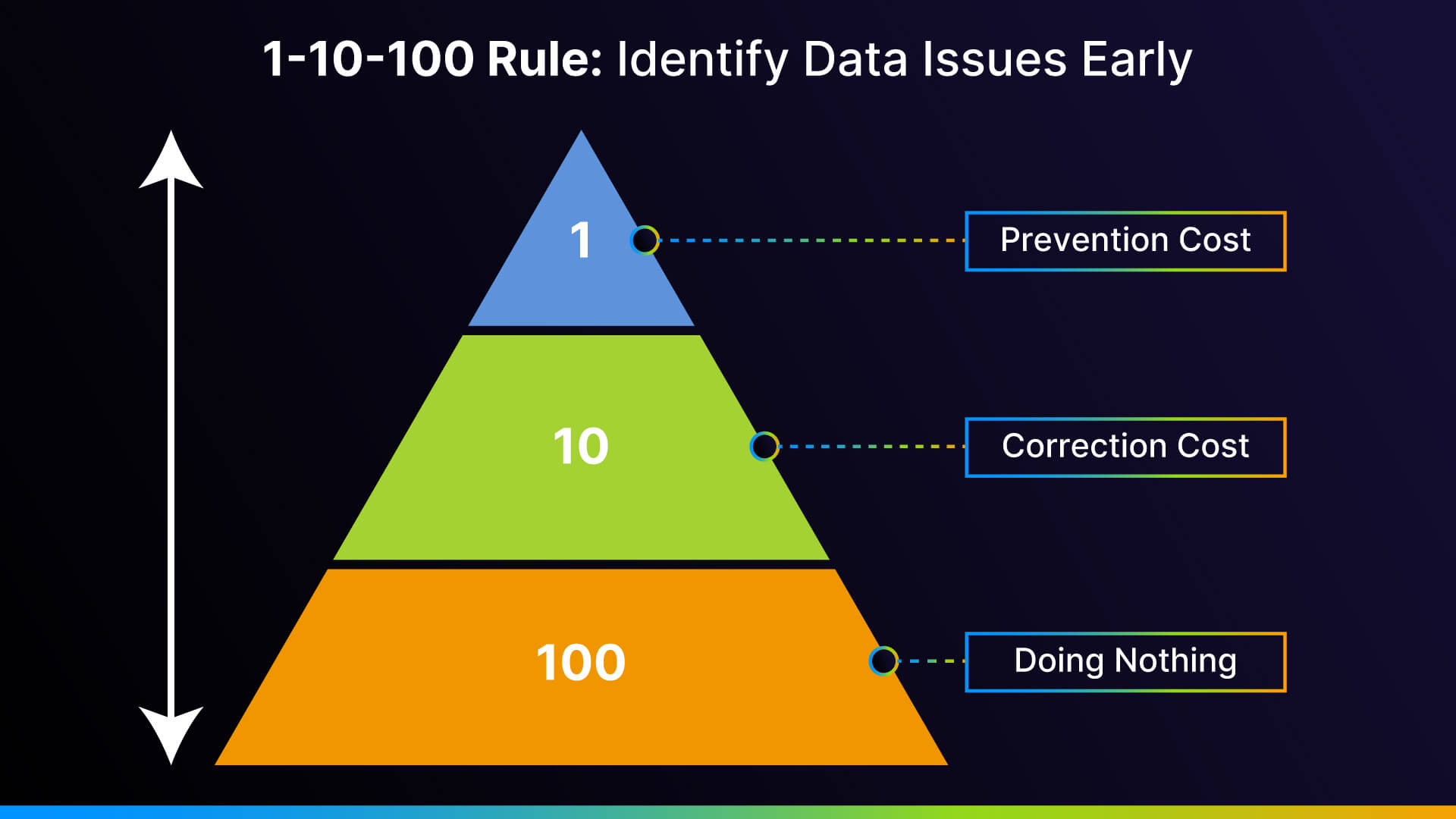

What is the 1-10-100 Rule?

Originally stemming from quality management, the 1-10-100 rule has found its rightful throne in the domain of data quality management. It posits that verifying the accuracy of a record at the point of entry costs $1, locating and correcting it later costs $10 or more, and leaving it uncorrected costs $100 or more per record. Once you factor in the downstream impact of bad data on business operations and possible compliance violations, the damage can be staggering. In essence, the rule highlights how steeply the cost of neglecting data quality upfront escalates: roughly tenfold at each stage.
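To make the arithmetic concrete, here is a small Python sketch that applies the rule’s illustrative per-record costs to a hypothetical batch of 1,000 bad leads. The dollar figures are the rule’s rule-of-thumb values, not measured costs, and the function names are our own.

```python
# Illustrative per-record costs from the 1-10-100 rule (rule-of-thumb values, not measured data).
COST_PER_RECORD = {"prevention": 1, "correction": 10, "failure": 100}


def total_cost(records: int, stage: str) -> int:
    """Cost of handling `records` bad records entirely at one stage."""
    return records * COST_PER_RECORD[stage]


def blended_cost(prevented: int, corrected: int, uncorrected: int) -> int:
    """Cost when a batch of bad records is split across the three stages."""
    return (
        total_cost(prevented, "prevention")
        + total_cost(corrected, "correction")
        + total_cost(uncorrected, "failure")
    )


if __name__ == "__main__":
    bad_records = 1_000  # hypothetical batch of bad leads
    for stage in COST_PER_RECORD:
        print(f"{stage:>10}: ${total_cost(bad_records, stage):,}")
    print(f"   blended: ${blended_cost(200, 500, 300):,}")
```

If every bad record is stopped at entry, the batch costs $1,000. If only 200 are stopped, 500 are fixed later and 300 slip through, the same batch costs $35,200.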

The High Cost of Poor Data Quality

The consequences of inadequate data quality are profound and stretch well beyond the financial, although the financial toll alone is estimated at $3.1 trillion a year in the US economy. For B2B marketers, the impact of poor data is particularly severe: futile marketing efforts, flawed buyer personas, and, worst of all, a damaged brand image. Bad data not only squanders vital resources such as time and money, but also erodes the morale of sales and marketing teams as they grapple with the fallout of incorrect or obsolete data. Learn more about the far-reaching impacts of poor data quality in our latest blog post.

Keeping Bad Leads Out is Your Best Strategy

Too often, experts pile up remedies and fixes to tackle the issue of bad data once it’s infiltrated your system. However, this reactive approach is akin to locking the barn door after the horse has bolted. The crux of the matter, and where marketing operations executives should laser-focus their efforts, is preventing bad data from entering the system in the first place.

This proactive strategy transcends mere damage control; it epitomizes smart data governance. By prioritizing data quality at the point of entry, businesses sidestep the costly, labor-intensive processes required to clean, correct, and mitigate the repercussions of substandard data. Put simply, the most efficient solution to data quality woes isn’t found in elaborate cleanup operations; it lies in erecting a robust barrier against bad data from the outset.

Technologies and Strategies for Data Defense

1. Data Governance Tools

These are your first line of defense. Implementing robust data validation at the point of entry ensures only clean, accurate data makes it into your system. Tools that verify email addresses, phone numbers, and other critical fields in real time can drastically reduce the incidence of bad data.
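As a minimal Python sketch of point-of-entry validation, assume a lead arrives as a dictionary with hypothetical `email`, `phone`, and `company` fields. The sketch only checks syntax and plausibility. Confirming that a mailbox or phone line actually exists is what the real-time verification tools described above do, and is not attempted here.

```python
import re

# Hypothetical lead schema: the fields we refuse to store without.
REQUIRED_FIELDS = ("email", "phone", "company")

# Deliberately simple syntax check (local@domain.tld, no spaces or extra "@").
# It does not prove the mailbox exists; a verification service would be needed for that.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[A-Za-z]{2,}$")


def digits_only(raw: str) -> str:
    return re.sub(r"\D", "", raw)


def normalize_phone(raw: str) -> str:
    """Keep a leading '+' plus digits, e.g. '+1 (555) 010-9999' -> '+15550109999'."""
    raw = raw.strip()
    return ("+" if raw.startswith("+") else "") + digits_only(raw)


def validate_lead(lead: dict) -> list:
    """Return a list of problems; an empty list means the lead may be stored."""
    errors = []
    for field in REQUIRED_FIELDS:
        if not str(lead.get(field, "")).strip():
            errors.append(f"missing {field}")

    email = str(lead.get("email", "")).strip()
    if email and not EMAIL_RE.match(email):
        errors.append("invalid email format")

    phone = str(lead.get("phone", "")).strip()
    # E.164 caps numbers at 15 digits; the lower bound of 7 is an assumption.
    if phone and not 7 <= len(digits_only(phone)) <= 15:
        errors.append("implausible phone number length")
    return errors


def accept_lead(lead: dict, database: list) -> list:
    """Gatekeeper: append a cleaned copy of the lead only if validation passes."""
    errors = validate_lead(lead)
    if not errors:
        clean = dict(lead)
        clean["email"] = str(lead["email"]).strip()
        clean["phone"] = normalize_phone(str(lead["phone"]))
        database.append(clean)
    return errors
```

For example, `accept_lead({"email": "jane@example", "phone": "123", "company": ""}, db)` returns three errors and leaves `db` untouched, so the bad record never costs more than the $1 check.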

Integrating data governance tools not only serves as an effective barrier against poor-quality data; it is also the most economical place to intervene. This approach aligns directly with the $1 tier of the rule highlighted earlier: prevention is far less costly than the cure.

2. Data Governance Frameworks

Establishing clear policies and procedures around data management creates a culture of accountability and precision. A strong governance framework ensures that data quality is not just an IT concern but a company-wide priority.

3. AI and Machine Learning

Artificial intelligence and machine learning algorithms can be game changers in spotting data anomalies before they become entrenched problems. These technologies learn from historical data patterns to flag inconsistencies, so checks can adapt as your data changes rather than relying only on fixed rules.
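A full machine learning approach would train a model on your historical records. As a much simpler, standard-library-only stand-in (a statistical profiling technique, not machine learning), the Python sketch below learns the character “shape” of each field from historical records and flags new values whose shape is rare or unseen. `ShapeProfiler` and its `min_share` threshold are hypothetical names chosen for illustration.

```python
from collections import Counter


def shape(value: str) -> str:
    """Replace digits with 9, uppercase letters with A, lowercase with a; keep punctuation."""
    out = []
    for ch in value:
        if ch.isdigit():
            out.append("9")
        elif ch.isalpha():
            out.append("A" if ch.isupper() else "a")
        else:
            out.append(ch)
    return "".join(out)


class ShapeProfiler:
    """Learns how often each value shape occurs per field in historical records."""

    def __init__(self, min_share: float = 0.01):
        self.min_share = min_share  # shapes rarer than this share of history are flagged
        self.counts = {}            # field -> Counter of shapes
        self.totals = Counter()     # field -> number of values seen

    def fit(self, records):
        for record in records:
            for field, value in record.items():
                self.counts.setdefault(field, Counter())[shape(str(value))] += 1
                self.totals[field] += 1
        return self

    def flag(self, record) -> list:
        """Return the fields whose value shape is rare or unseen in the history."""
        flagged = []
        for field, value in record.items():
            total = self.totals[field]
            if total == 0:
                continue  # no history for this field, so nothing to compare against
            share = self.counts[field][shape(str(value))] / total
            if share < self.min_share:
                flagged.append(field)
        return flagged
```

Fit on historical records where phones look like `555-010-1234` and a hypothetical `zip` field holds five digits, and a new lead with phone `5550109876` and zip `9410` is flagged on both fields. The shape trick works best on fixed-format fields such as phone numbers or postal codes; free-text fields like company names vary too much for it to be useful.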

4. Comprehensive Training Programs

Human error is a significant contributor to data corruption. Comprehensive training programs for staff on the importance of data accuracy and how to input data correctly are crucial. An informed team is a vital asset in your quest to eliminate bad data.

Conclusion

In the high-stakes world of B2B marketing, maintaining pristine data quality is more than a best practice—it’s a lifeline to operational efficiency and marketing efficacy. The 1-10-100 rule underscores the critical, yet often overlooked, concept that preventing bad data at the point of entry saves exponential costs and resources down the line, compared to rectifying it later. By harnessing a suite of defensive technologies and strategies, businesses can secure their data processes against the costly incursions of bad leads, thereby protecting their bottom line and enhancing their market standing.